CloudFormation example for AWS CodePipeline - Hugo Deployment

I recently blogged about how you can use AWS CodePipeline to automatically deploy your Hugo website to AWS S3, and I promised a CloudFormation template, so here we go. You can find the full template in this GitHub repo.

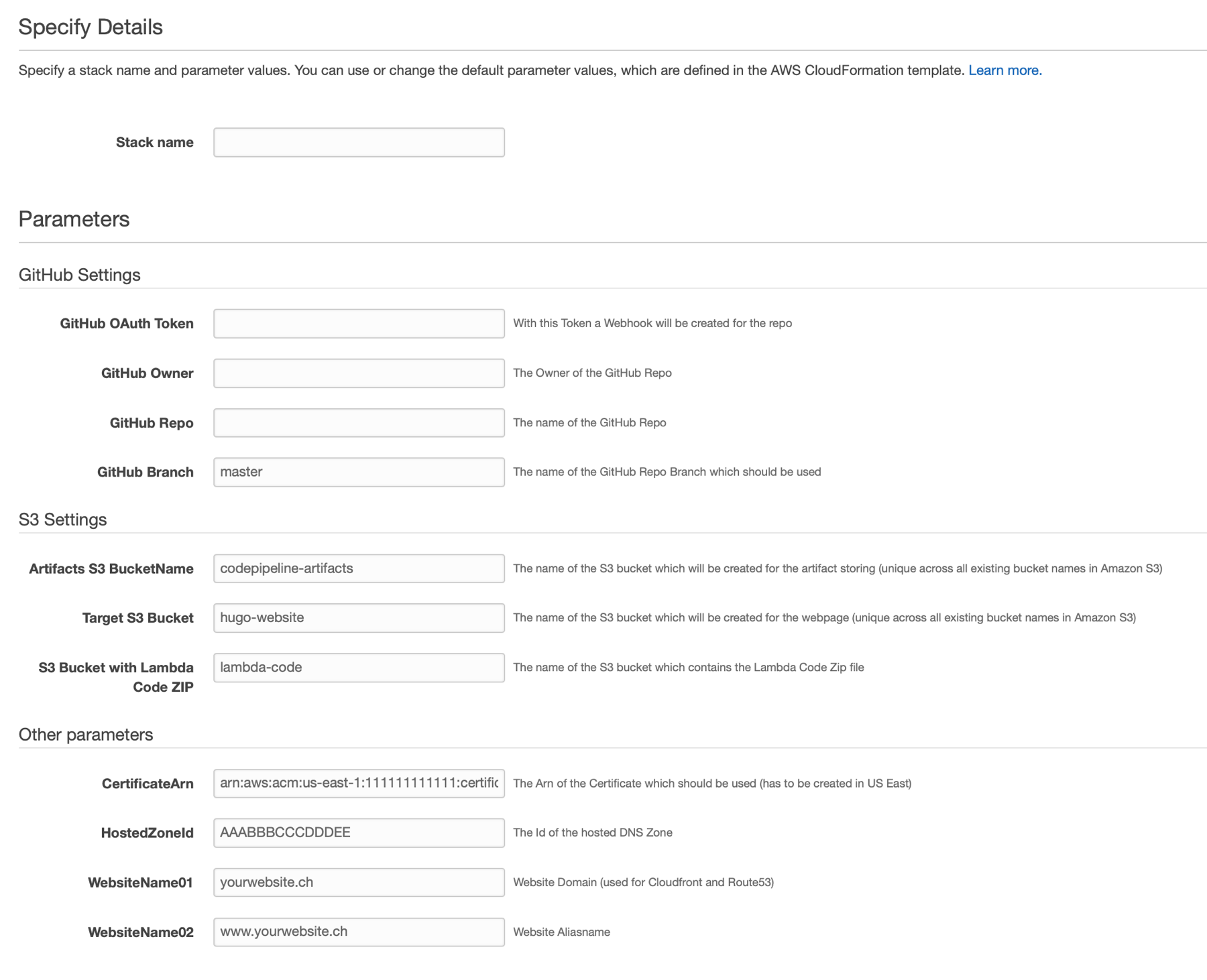

When you create a new stack from the template, you will be asked for the following parameters. Let's look at them in detail:

Important: The referenced GitHub repo has to be your repo containing the Hugo source files. The buildspec.yml file mentioned in the previous blog post has to be in this repo as well.
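If you want to catch a missing file before the first pipeline run, a quick local check of your clone helps. This is a hypothetical helper and not part of the template. It assumes buildspec.yml sits in the repo root, which is where CodeBuild looks by default. It also assumes your Hugo config uses one of the file names in `HUGO_CONFIG_NAMES`, so adjust that tuple to your setup.

```python
from pathlib import Path

# Hypothetical list of accepted Hugo config file names; adjust to your site
HUGO_CONFIG_NAMES = ("hugo.toml", "hugo.yaml", "hugo.json",
                     "config.toml", "config.yaml", "config.json")


def check_repo_layout(repo_dir):
    """Return a list of problems found in a local clone of the Hugo repo."""
    root = Path(repo_dir)
    problems = []
    # CodeBuild looks for buildspec.yml in the source root unless configured otherwise
    if not (root / "buildspec.yml").is_file():
        problems.append("buildspec.yml missing from repo root")
    # At least one Hugo site config file should be present in the root
    if not any((root / name).is_file() for name in HUGO_CONFIG_NAMES):
        problems.append("no Hugo config file found in repo root")
    return problems
```

Call it with the path of your local clone, for example `check_repo_layout("path/to/your/hugo-site")`. An empty list means both files were found.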

Needed parameters

- GitHub OAuth Token → The token used to create the webhook in the repo
- GitHub Owner → The owner of the GitHub repo
- GitHub Repo → The name of the GitHub repo
- GitHub Branch → The name of the branch to build from
- Artifacts S3 BucketName → The name of the S3 bucket where the CodePipeline artifacts will be stored. This bucket will be created by the stack!
- Target S3 Bucket → The name of the S3 bucket your Hugo website will be deployed to. This bucket will be created by the stack!
- S3 Bucket with Lambda Code ZIP → The existing S3 bucket that contains the ZIP file of the Python script for the CloudFront invalidation. The file has to be named invalidateCloudFront.zip and can be found here
- CertificateArn → The ARN of the certificate to use on the CloudFront distribution (it has to be created in US East (N. Virginia), us-east-1!)
  Note: I tried to generate the certificate with the template as well, but unfortunately there is no easy way to do this. Terraform seems to offer this functionality, so I think I will have a look at Terraform soon.
- HostedZoneId → The ID of the Route 53 hosted zone, used to create the following two subdomains/website names
- WebsiteName01 → Subdomain 1 of the hosted zone
- WebsiteName02 → Subdomain 2 of the hosted zone
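If you prefer scripting the stack creation over clicking through the console, the parameters map onto a boto3 `create_stack` call. This is a sketch under several assumptions. The parameter keys are my guesses, based on the names above and the `!Ref` names in the template excerpts further down. `LambdaCodeBucket` in particular is a hypothetical key, so check the Parameters section of the template. The us-east-1 check simply encodes the certificate requirement from the list. `CAPABILITY_NAMED_IAM` is required because the template creates IAM resources with custom names.

```python
# ASSUMED parameter keys; "LambdaCodeBucket" is hypothetical, check the template's Parameters
REQUIRED_KEYS = (
    "GitHubOAuthToken", "GitHubOwner", "GitHubRepo", "GitHubBranch",
    "ArtifactsBucketName", "TargetS3Bucket", "LambdaCodeBucket",
    "CertificateArn", "HostedZoneId", "WebsiteName01", "WebsiteName02",
)


def certificate_region(cert_arn):
    """Return the region of an ACM ARN like arn:aws:acm:us-east-1:123:certificate/abc."""
    parts = cert_arn.split(":")
    if len(parts) < 6 or parts[0] != "arn" or parts[2] != "acm":
        raise ValueError(f"not an ACM certificate ARN: {cert_arn!r}")
    return parts[3]


def build_stack_parameters(values):
    """Validate the inputs and turn them into CloudFormation's Parameters list."""
    missing = [key for key in REQUIRED_KEYS if not values.get(key)]
    if missing:
        raise ValueError(f"missing parameters: {', '.join(missing)}")
    # CloudFront only accepts ACM certificates issued in us-east-1
    if certificate_region(values["CertificateArn"]) != "us-east-1":
        raise ValueError("CertificateArn must be an ACM certificate in us-east-1")
    return [{"ParameterKey": key, "ParameterValue": values[key]} for key in REQUIRED_KEYS]


def create_hugo_stack(cf_client, stack_name, template_body, values):
    """Create the stack; cf_client is expected to be a boto3 CloudFormation client."""
    return cf_client.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        Parameters=build_stack_parameters(values),
        # Named IAM resources (e.g. the CodeBuildAccess_Hugo policy) need this capability
        Capabilities=["CAPABILITY_NAMED_IAM"],
    )
```

Usage would look something like `create_hugo_stack(boto3.client("cloudformation"), "hugo-pipeline", template_text, values)`. The stack name and `template_text` (the template file's contents) are up to you. Passing the client in keeps the validation testable without AWS access.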

Created AWS Resources

When you create a stack from this template, the following resources are created automatically:

- PipelineArtifactsBucket → AWS::S3::Bucket for the Artifacts S3 BucketName parameter
- PipelineWebpageBucket → AWS::S3::Bucket for the Target S3 Bucket parameter
- BucketPolicy → AWS::S3::BucketPolicy for the target S3 bucket that hosts the generated Hugo website; it allows public read access
- myCloudfrontDist → AWS::CloudFront::Distribution for the following subdomain names
- domainDNSRecord1 → AWS::Route53::RecordSet for WebsiteName01
- domainDNSRecord2 → AWS::Route53::RecordSet for WebsiteName02
- CodeBuildProject → AWS::CodeBuild::Project, the actual build project used in the CodePipeline
- CodePipeline → AWS::CodePipeline::Pipeline
- GithubWebhook → AWS::CodePipeline::Webhook
- CreateCodePipelinePolicy → AWS::IAM::ManagedPolicy, the managed policy used by the CodePipeline role
- CodePipelineRole → AWS::IAM::Role with the managed policy for CodePipeline
- CreateCodeBuildPolicy → AWS::IAM::ManagedPolicy, the managed policy used by the CodeBuild role
- CodeBuildRole → AWS::IAM::Role with the managed policy for CodeBuild
- CreateLambdaExecutionPolicy → AWS::IAM::ManagedPolicy for the Lambda execution role
- LambdaExecutedRole → AWS::IAM::Role with the managed policy that gives the Lambda function the permissions it needs
- LambdaCloudfrontInvalidation → AWS::Lambda::Function, the Python function that creates the CloudFront invalidation
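To confirm that a stack actually ended up with all of these resources, you can compare the output of CloudFormation's `describe_stack_resources` with the list above. This is a minimal sketch. The logical IDs and resource types are copied from the list, while the helper name and the stack name in the usage note are hypothetical.

```python
# Logical IDs and resource types as listed above
EXPECTED_RESOURCES = {
    "PipelineArtifactsBucket": "AWS::S3::Bucket",
    "PipelineWebpageBucket": "AWS::S3::Bucket",
    "BucketPolicy": "AWS::S3::BucketPolicy",
    "myCloudfrontDist": "AWS::CloudFront::Distribution",
    "domainDNSRecord1": "AWS::Route53::RecordSet",
    "domainDNSRecord2": "AWS::Route53::RecordSet",
    "CodeBuildProject": "AWS::CodeBuild::Project",
    "CodePipeline": "AWS::CodePipeline::Pipeline",
    "GithubWebhook": "AWS::CodePipeline::Webhook",
    "CreateCodePipelinePolicy": "AWS::IAM::ManagedPolicy",
    "CodePipelineRole": "AWS::IAM::Role",
    "CreateCodeBuildPolicy": "AWS::IAM::ManagedPolicy",
    "CodeBuildRole": "AWS::IAM::Role",
    "CreateLambdaExecutionPolicy": "AWS::IAM::ManagedPolicy",
    "LambdaExecutedRole": "AWS::IAM::Role",
    "LambdaCloudfrontInvalidation": "AWS::Lambda::Function",
}


def missing_resources(stack_resources, expected=EXPECTED_RESOURCES):
    """Return logical IDs that are absent or have an unexpected type, sorted."""
    # stack_resources is the "StackResources" list from describe_stack_resources
    found = {r["LogicalResourceId"]: r["ResourceType"] for r in stack_resources}
    return sorted(
        logical_id for logical_id, res_type in expected.items()
        if found.get(logical_id) != res_type
    )
```

For example, `missing_resources(boto3.client("cloudformation").describe_stack_resources(StackName="hugo-pipeline")["StackResources"])` should return an empty list for a complete stack.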

Code examples

Throughout the template I tried to follow the principle of least privilege. For example, if you look at the CodeBuild policy, you can see that CodeBuild is only allowed to work with the S3 buckets created by the stack.

```yaml
CreateCodeBuildPolicy:
  Type: AWS::IAM::ManagedPolicy
  Properties:
    ManagedPolicyName: CodeBuildAccess_Hugo
    Description: "Policy for access to logs and Hugo S3 Buckets"
    Path: "/"
    PolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Sid: VisualEditor0
          Effect: Allow
          Action: s3:*
          Resource: [
            !Join [ '', ['arn:aws:s3:::', !Ref TargetS3Bucket] ],
            !Join [ '', ['arn:aws:s3:::', !Ref TargetS3Bucket, '/*'] ],
            !Join [ '', ['arn:aws:s3:::', !Ref ArtifactsBucketName] ],
            !Join [ '', ['arn:aws:s3:::', !Ref ArtifactsBucketName, '/*'] ]
            ]
        - Sid: VisualEditor1
          Effect: Allow
          Action: logs:*
          Resource: '*'
```

The following part creates the CodePipeline with all its stages (source from GitHub, build on CodeBuild, deploy to S3 and invoke the Lambda function):

```yaml
CodePipeline:
  Type: AWS::CodePipeline::Pipeline
  Properties:
    Name: PipelineForStaticWebpageWithHugo
    ArtifactStore:
      Type: S3
      Location: !Ref PipelineArtifactsBucket
    RestartExecutionOnUpdate: true
    RoleArn: !GetAtt CodePipelineRole.Arn
    Stages:
      - Name: Source
        Actions:
          - Name: Source
            InputArtifacts: []
            ActionTypeId:
              Category: Source
              Owner: ThirdParty
              Version: 1
              Provider: GitHub
            OutputArtifacts:
              - Name: SourceCode
            Configuration:
              Owner: !Ref GitHubOwner
              Repo: !Ref GitHubRepo
              Branch: !Ref GitHubBranch
              PollForSourceChanges: false
              OAuthToken: !Ref GitHubOAuthToken
            RunOrder: 1
      - Name: Build
        Actions:
          - Name: CodeBuild
            ActionTypeId:
              Category: Build
              Owner: AWS
              Provider: CodeBuild
              Version: '1'
            InputArtifacts:
              - Name: SourceCode
            OutputArtifacts:
              - Name: PublicFiles
            Configuration:
              ProjectName: !Ref CodeBuildProject
            RunOrder: 1
      - Name: Deploy
        Actions:
          - Name: S3Deploy
            ActionTypeId:
              Category: Deploy
              Owner: AWS
              Provider: S3
              Version: '1'
            InputArtifacts:
              - Name: PublicFiles
            Configuration:
              BucketName: !Ref TargetS3Bucket
              Extract: 'true'
            RunOrder: 1
          - Name: LambdaDeploy
            ActionTypeId:
              Category: Invoke
              Owner: AWS
              Provider: Lambda
              Version: '1'
            Configuration:
              FunctionName: invalidateCloudfront
              UserParameters: !Ref myCloudfrontDist
            RunOrder: 2
```

The last piece is the Lambda function, written in Python, which creates the CloudFront invalidation. It took me quite some time to figure out how to get the CodePipeline job ID and how to get the ID of the CloudFront distribution out of the UserParameters.
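Both values come from the event that CodePipeline passes to the function. The job ID sits under `CodePipeline.job` → `id`. Whatever is configured as `UserParameters` in the pipeline action arrives as a plain string under `data` → `actionConfiguration` → `configuration`. Here that string is the distribution ID, because `!Ref` on a CloudFront distribution returns its ID. The sketch below uses a trimmed, made-up event with placeholder IDs (the real event carries more keys, such as artifact details). The helper `extract_job_info` is hypothetical and simply mirrors the lookups the handler does.

```python
# Trimmed, made-up example of a CodePipeline Lambda invoke event (placeholder IDs)
SAMPLE_EVENT = {
    "CodePipeline.job": {
        "id": "11111111-abcd-1111-abcd-111111abcdef",
        "data": {
            "actionConfiguration": {
                "configuration": {
                    "FunctionName": "invalidateCloudfront",
                    # Resolved from "UserParameters: !Ref myCloudfrontDist"
                    "UserParameters": "E2EXAMPLE123",
                }
            }
        },
    }
}


def extract_job_info(event):
    """Return (job_id, distribution_id) from a CodePipeline invoke event."""
    job = event["CodePipeline.job"]
    job_id = job["id"]
    # UserParameters is passed through as a plain string, so no JSON parsing is needed
    dist_id = job["data"]["actionConfiguration"]["configuration"]["UserParameters"]
    return job_id, dist_id
```

If you ever need more than one value in UserParameters, a JSON string plus `json.loads` is a common pattern, but a single ID as a plain string keeps things simple. The complete function looks like this: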

```python
import time
import logging

import boto3
from botocore.exceptions import ClientError

LOGGER = logging.getLogger()
LOGGER.setLevel(logging.INFO)


def codepipeline_success(job_id):
    """
    Puts CodePipeline Success Result
    """
    try:
        codepipeline = boto3.client('codepipeline')
        codepipeline.put_job_success_result(jobId=job_id)
        LOGGER.info('===SUCCESS===')
        return True
    except ClientError as err:
        LOGGER.error("Failed to PutJobSuccessResult for CodePipeline!\n%s", err)
        return False


def codepipeline_failure(job_id, message):
    """
    Puts CodePipeline Failure Result
    """
    try:
        codepipeline = boto3.client('codepipeline')
        codepipeline.put_job_failure_result(
            jobId=job_id,
            failureDetails={'type': 'JobFailed', 'message': message}
        )
        LOGGER.info('===FAILURE===')
        return True
    except ClientError as err:
        LOGGER.error("Failed to PutJobFailureResult for CodePipeline!\n%s", err)
        return False


def lambda_handler(event, context):
    LOGGER.info(event)
    try:
        job = event['CodePipeline.job']
        job_id = job['id']
    except KeyError as err:
        # Without a job ID there is no way to report back to CodePipeline
        LOGGER.error("Could not retrieve CodePipeline Job ID!\n%s", err)
        return False

    try:
        # The CloudFront distribution ID is passed in via UserParameters
        dist_id = job['data']['actionConfiguration']['configuration']['UserParameters']
        client = boto3.client('cloudfront')
        client.create_invalidation(
            DistributionId=dist_id,
            InvalidationBatch={
                'Paths': {
                    'Quantity': 1,
                    'Items': ['/*']
                },
                # CallerReference has to be unique for every invalidation request
                'CallerReference': str(time.time())
            }
        )
    except (KeyError, ClientError) as err:
        # Report the failure so the pipeline does not wait until the action times out
        LOGGER.error("CloudFront invalidation failed!\n%s", err)
        codepipeline_failure(job_id, str(err))
        return False

    return codepipeline_success(job_id)
```

I hope this template helps you build your own CodePipelines with CloudFormation.